Easy Introduction to Reinforcement Learning

Reinforcement learning (RL) is a branch of machine learning that focuses on training computers to make optimal decisions by interacting with their environment. Instead of being given explicit instructions, the computer learns through trial and error: by exploring the environment and receiving rewards or punishments for its actions.

Together with supervised and unsupervised learning, reinforcement learning is one of three basic machine learning approaches. Reinforcement learning has a wide range of real-world applications, including robotics, game playing, and diagnosing rare diseases.

What is reinforcement learning?

Reinforcement learning (RL) is a way for computers to learn independently by making a series of decisions and learning from the outcomes. Through trial and error, computer programs determine the best actions within a certain context and optimize their performance.

The computer receives positive or negative feedback based on its actions and gradually learns how to complete a task. In other words, RL is about learning the optimal behavior in an environment to obtain maximum reward.

RL is an approach suitable for addressing problems involving a series of decisions that all affect one another.

Training a computer to win at backgammon, for example, involves a whole sequence of good decisions, not just one. In games like this, there are several possible actions and scenarios, and a lot of uncertainty regarding how short-term actions pay off in the long run. RL can also help solve complex problems of control, such as walking robots or self-driving cars.

Unlike the other two learning frameworks, which operate on the basis of an existing dataset, RL gathers data as it interacts with its environment. It allows a piece of software to find the optimal solution by exploring, interacting with, and ultimately learning from the environment.

In RLHF, a pre-trained language model (e.g., a chatbot) is assessed by humans, who score the responses it generates. By incorporating human feedback, experts can direct the model to favor certain outputs over others—for example, those that read more naturally or are more helpful.

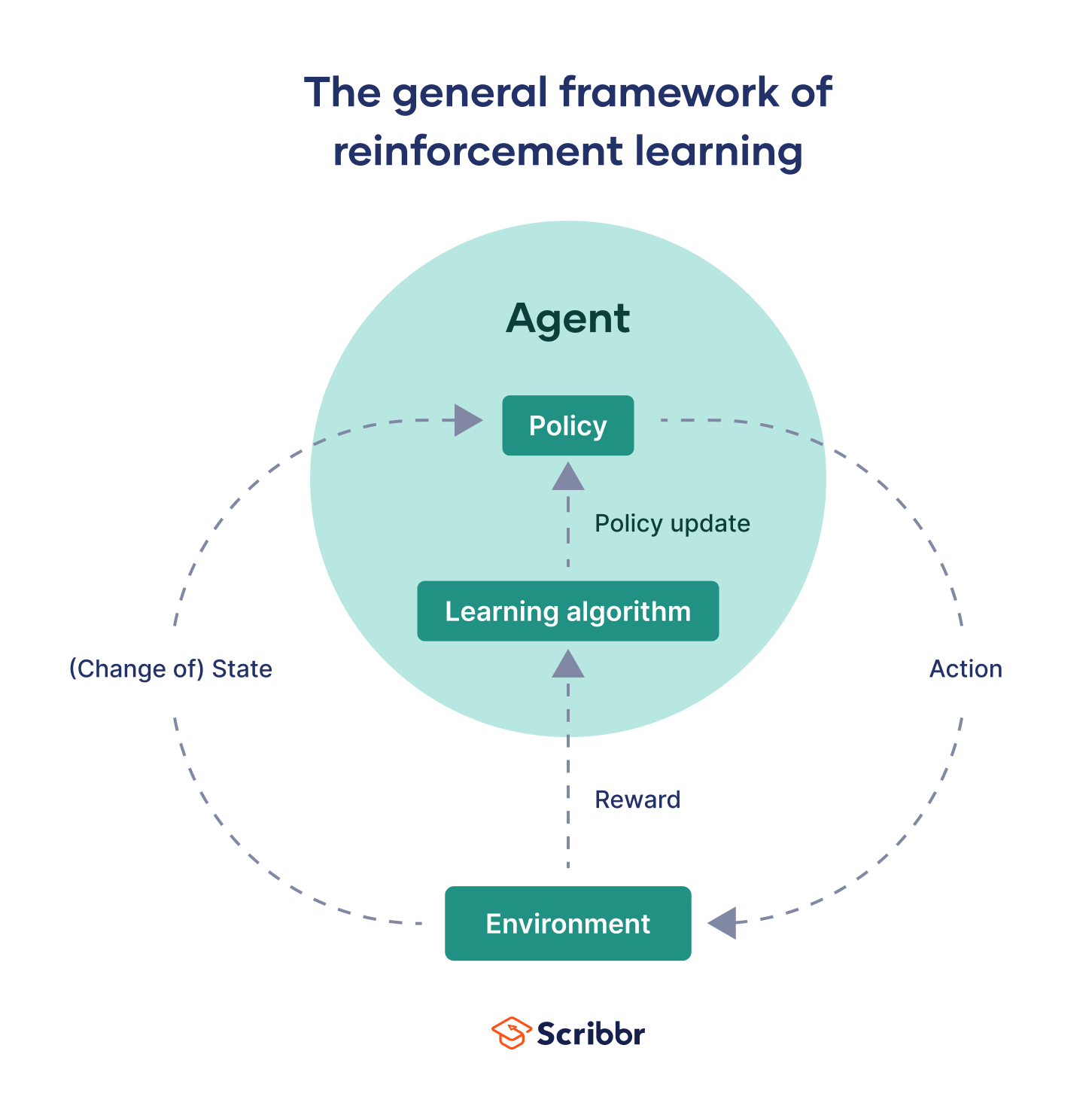

The elements of reinforcement learning

Reinforcement learning involves the following key elements:

- Environment is the context in which a computer program operates. This can be virtual, like a video game, or physical, like a house.

- Agent refers to the learner or decision-maker (i.e., the computer program) within the environment. The agent explores the environment and interacts with it.

- Action refers to moves taken by an agent within the environment.

- State is the current situation of the agent at a specific time. Each action leads to a change of state.

- Reward can be positive, to reinforce good behavior, or negative, to deter undesirable behavior. It is feedback that the agent receives from the environment for a certain action.

- Policy is how an agent behaves at a given time. It defines the mapping or path between different states or situations and actions, guiding the agent on what action to take next based on the current state.

- Value represents how beneficial a particular state is in the long run and helps the agent assess the desirability of different states or actions. The value is determined based on the potential rewards or penalties associated with a state or action. By estimating the value of different states or actions, the agent can define a policy that prioritizes actions and states with higher expected long-term benefits.

Additionally, algorithms are an integral part of the RL process and come into play in various steps. They are used to design the learning agent—i.e, its decision-making process, how it updates its policy, and how it learns from the feedback received.

- Environment is everything that constitutes the game’s world: the maze, the enemy (ghosts), the dots, the bonus items (fruits), the power pellets, etc.

- Agent refers to the eponymous character. The agent’s goal is to “eat” all the dots in the maze, avoid the ghosts, and maximize its score.

- Action refers to moves Pac-Man can make: moving up, down, right, or left.

- States can be different situations or scenarios, such as being in a corner of the maze with no dots nearby and several ghosts approaching. The positions of Pac-Man and the ghosts, the remaining dots, and whether ghosts are in blue mode (so that Pac-Man can chase them) all constitute states.

- Reward can be positive—e.g., receiving points for eating dots—or negative—e.g., losing a “life” if caught by a ghost.

- Policy can be a rule like “if a ghost is nearby, move in the opposite direction” or “if a dot is nearby, move towards it.”

- Value can be understood as the desirability of being in a particular state. The value of the state described above, where Pac-Man is cornered by the ghosts, would be low because Pac-Man is at risk of being caught and has little opportunity to increase the score by eating dots. A state where Pac-Man eats a power pellet and can chase the ghosts would have high value because it allows Pac-Man to collect many dots, eliminate the ghosts, and increase the score.

How does reinforcement learning work?

At the heart of reinforcement learning lies the concept of reinforcing optimal behavior or action through a reward system. Engineers come up with a method of rewarding desired behaviors and punishing unwanted behaviors.

They also employ various techniques to prevent short-term rewards from stalling the agent, delaying the achievement of the overall objective. This means defining rewards that align with the long-term objective so that the agent learns to prioritize actions that lead to the desired outcome.

Reinforcement learning is an iterative cycle of exploration, feedback, and improvement. The process can be better understood through this workflow:

- Define the problem

- Set up the environment

- Create an agent

- Start learning

- Receive feedback

- Update the policy

- Refine

- Deploy

As an example, let’s apply the RL workflow to a robotic vacuum cleaner:

- Define the problem: We want to train a robotic vacuum cleaner to effectively clean a room by itself.

- Set up the environment: We create a simulated environment that represents the room in which the robotic vacuum cleaner will operate, including the layout, furniture, and anything else in the room.

- Create an agent: The robotic vacuum cleaner is the learner or agent. We equip it with the right technology to sense the environment and move around.

- Start learning: At first, the robotic vacuum cleaner explores the room randomly, bumping into furniture or obstacles and cleaning parts of the room without a specific strategy. It gathers information about the room and how its actions affect cleanliness.

- Receive feedback: After each action, the vacuum cleaner receives either a positive or a negative reward, shaping its decision-making process over time. For example, if it successfully avoids colliding with furniture, there is a positive reward, while a negative reward is given when the cleaner moves aimlessly or covers the same spot repeatedly.

- Update the policy: Based on the received rewards, the robotic vacuum cleaner updates its decision-making strategy or policy to focus more on actions that lead to positive rather than negative rewards.

- Refine: The robotic vacuum cleaner keeps exploring the room, taking actions, receiving feedback, and updating the policy. With each iteration, it improves its knowledge of which actions maximize cleaning efficiency and avoid obstacles. Gradually, it also adapts to different room layouts.

- Deploy: Once the robotic vacuum has learned an effective policy, it applies it to clean the room autonomously.

Reinforcement learning compared to other methods

Reinforcement learning is a distinct approach to machine learning that significantly differs from the other two main approaches.

Supervised learning vs. reinforcement learning

In supervised learning, a human expert has labeled the dataset, which means that the correct answer is given. For example, the dataset could consist of images of different cars that an expert has labeled with the manufacturer of each car.

The learning agent has a supervisor, who, like a teacher, provides the right answers. Through training with this labeled dataset, the agent receives feedback and learns how to classify new, unseen data (e.g., car photos) in the future.

In reinforcement learning, data is not part of the input but is accumulated by interacting with the environment. Instead of telling the system in advance which actions are optimal to perform a task, reinforcement learning uses rewards and penalties. So the agent gets feedback once it takes an action.

Unsupervised learning vs. reinforcement learning

Unsupervised learning deals with unlabeled data, and there is no feedback involved. The goal is to explore the dataset and find similarities, differences, or clusters in the input data without prior knowledge of the expected output.

Reinforcement learning, on the other hand, involves exploration of the environment, not of a dataset, and the end goal is different: the agent tries to take the best possible action in a given situation to maximize the total reward. With no training dataset, the RL problem is solved by the agent’s own actions with input from the environment.

The following table shows the difference between supervised learning, unsupervised learning and reinforcement learning.

| Supervised learning | Unsupervised learning | Reinforcement learning | |

|---|---|---|---|

| Input data | Labeled: the “right answer” is included | Unlabeled: no “right answer” specified | Data are not part of the input, they are collected through trial and error |

| Problem to be solved | Used to make a prediction (e.g., the future value of a stock) or a classification (e.g., correctly identifying spam emails) | Used to explore and discover patterns, structures, or relationships in large datasets (e.g., people who order product A also order product B) | Used to solve reward-based problems

(e.g., a video game) |

| Solution | Maps input to output | Finds similarities and differences in input data to classify it into classes | Finds which states and actions would

maximize the total cumulative reward of the agent |

| General tasks | Classification, regression | Clustering, dimensionality reduction, association learning | Exploration and exploitation |

| Examples | Image detection, stock market prediction | Customer segmentation, product recommendation | Game playing, robotic vacuum cleaners |

| Supervision | Yes | No | No |

| Feedback | Yes. The correct set of actions is provided. | No | Yes, through rewards and punishments (positive and negative rewards) |

Reinforcement learning benefits and challenges

Reinforcement learning has several benefits as a training method:

Benefits

- Complexity. RL can be used to solve very complex problems involving high uncertainty, in many cases surpassing human performance. For example, an AI program called AlphaGo was the first computer program to defeat a human world champion in the ancient Chinese game of Go and is the strongest Go player in history.

- Adaptability. RL can handle environments in which the outcomes of actions are not always predictable. This is handy for real-world applications where the environment may change over time or is uncertain.

- Independent decision-making. With reinforcement learning, intelligent systems can make decisions on their own without human intervention. They can learn from their experiences and adapt their behavior to achieve specific goals.

Challenges and limitations

However, it also comes with certain challenges and limitations:

- Large data requirements. Reinforcement learning is “data-hungry”: it requires even more data than supervised learning, as well as many interactions, to learn effectively. Getting enough training data is time- and resource-consuming. In some cases, testing RL systems like autonomous vehicles solely in a real-world environment can be dangerous.

- Complexity of the real world. In real life, feedback might be delayed: for example, it may take months or years to know whether an investment decision paid off or not. Also, in an environment like a game world, the conditions under which the agent repeats its decision process don’t change, which is far from the realities of life.

- Difficulty of designing effective rewards. Data scientists may struggle to mathematically express a reward so that it mirrors how a certain action will help the agent get closer to a final goal. For example, if we want to teach a car to make a turn without hitting the curb, the reward function should take into account factors like the distance between the car and the curb and the start of the steering action. In other words, the closer the car gets to the curb, the lower the reward should be, to minimize the chance of collisions.

Other interesting articles

If you want more tips on using AI tools, understanding plagiarism, and citing sources, make sure to check out some of our other articles with explanations, examples, and formats.

Using AI tools

Plagiarism

Frequently asked questions about reinforcement learning

- What are some real-life applications of reinforcement learning?

-

Some real-life applications of reinforcement learning include:

- Healthcare. Reinforcement learning can be used to create personalized treatment strategies, known as dynamic treatment regimes (DTRs), for patients with long-term illnesses. The input is a set of clinical observations and assessments of a patient. The outputs are the treatment options or drug dosages for every stage of the patient’s journey.

- Education. Reinforcement learning can be used to create personalized learning experiences for students. This includes tutoring systems that adapt to student needs, identify knowledge gaps, and suggest customized learning trajectories to enhance educational outcomes.

- Natural language processing (NLP). Text summarization, question answering, machine translation, and predictive text are all NLP applications using reinforcement learning.

- Robotics. Deep learning and reinforcement learning can be used to train robots that have the ability to grasp various objects , even objects they have never encountered before. This can, for example, be used in the context of an assembly line.

- What is deep reinforcement learning?

-

Deep reinforcement learning is the combination of deep learning and reinforcement learning.

- Deep learning is a collection of techniques using artificial neural networks that mimic the structure of the human brain. With deep learning, computers can recognize complex patterns in large amounts of data, extract insights, or make predictions, without being explicitly programmed to do so. The training can consist of supervised learning, unsupervised learning, or reinforcement learning.

- Reinforcement learning (RL) is a learning mode in which a computer interacts with an environment, receives feedback and, based on that, adjusts its decision-making strategy.

- Deep reinforcement learning is a specialized form of RL that utilizes deep neural networks to solve more complex problems. In deep reinforcement learning, we combine the pattern recognition strengths of deep learning and neural networks with the feedback-based learning of RL.

- What is the exploration vs exploitation trade off in reinforcement learning?

-

A key challenge that arises in reinforcement learning (RL) is the trade-off between exploration and exploitation. This challenge is unique to RL and doesn’t arise in supervised or unsupervised learning.

Exploration is any action that lets the agent discover new features about the environment, while exploitation is capitalizing on knowledge already gained. If the agent continues to exploit only past experiences, it is likely to get stuck in a suboptimal policy. On the other hand, if it continues to explore without exploiting, it might never find a good policy.

An agent must find the right balance between the two so that it can discover the optimal policy that yields the maximum rewards.

Sources in this article

We strongly encourage students to use sources in their work. You can cite our article (APA Style) or take a deep dive into the articles below.

This Scribbr articleNikolopoulou, K. (2023, August 15). Easy Introduction to Reinforcement Learning. Scribbr. Retrieved January 8, 2024, from https://www.scribbr.com/ai-tools/reinforcement-learning/

Li, Y. (2018). Deep reinforcement learning. arXiv (Cornell University). https://doi.org/10.48550/arxiv.1810.06339

Naeem, M., Rizvi, S. S., & Coronato, A. (2020). A Gentle Introduction to Reinforcement Learning and its Application in Different Fields. IEEE Access, 8, 209320–209344. https://doi.org/10.1109/access.2020.3038605

1 comment

Kassiani Nikolopoulou (Scribbr Team)

July 14, 2023 at 2:28 PMThanks for reading! Hope you found this article helpful. If anything is still unclear, or if you didn’t find what you were looking for here, leave a comment and we’ll see if we can help.